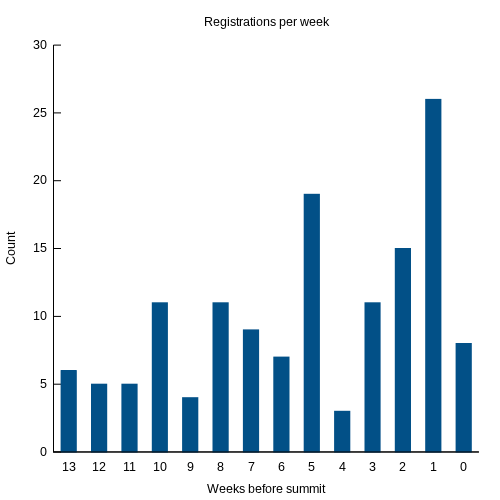

The other day a colleague asked for my help determining the cause of a broken build. When run with ElectricMake, the build consistently failed with this error:

Error: Could not open include file "api.h".

make: *** [js/src/jsreflect.o] Error 1

Diagnosing problems like this is similar to investigation in any scientific field: form a hypothesis, devise an experiment to test it, and use the results of the experiment to refine the hypothesis; then repeat until you can explain the observed behavior. In this case I didn’t have access to the build environment so I couldn’t run builds myself, which limited my ability to experiment somewhat. Instead, I had to rely on the data my colleague provided: an emake annofile from the build, with lookup-level logging. If you can only get one build artifact for debugging, that’s a pretty good choice.

Hypothesis: api.h does not exist

The first thing to check is whether the file api.h exists at all. If it doesn’t, that would explain the failure. Of course, I had been told that this build works with gmake, so this is a pretty flimsy hypothesis — but it pays to be thorough.

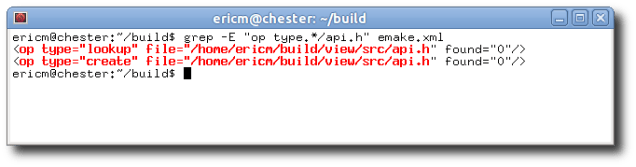

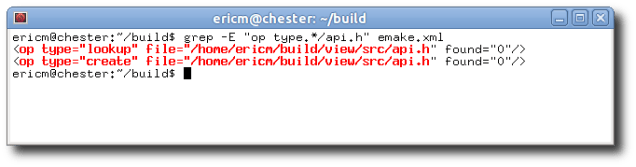

One way to test this theory is to check the usage reported on the file. If the only usage is failed lookups, then the file never existed. If you see other usage, like reads or modifications to the file, that invalidates this hypothesis. We can use grep to search the annotation for usage on the file:

The file is clearly created during the build, so this theory is BUSTED.

Hypothesis: the writer job comes after the reader job

Since the file is created during the build, perhaps the problem is that the job that created it occurs later in the build than the job that needs it. That could happen if the makefile was set up incorrectly, for example. Like the previous theory, this one is on shaky ground because the build allegedly works with gmake. It’s easy to test though: find the job that created the file, and the job that reported the error, then compare their serial order — the order in which they would have run if the build were executed serially. If the writer has a later serial order, then the hypothesis is confirmed. Otherwise, the hypothesis is invalidated.

To find the writer job, I use less to search for the create operation referencing that file:

Then I search backwards for the containing <job> tag to determine which job created the file:

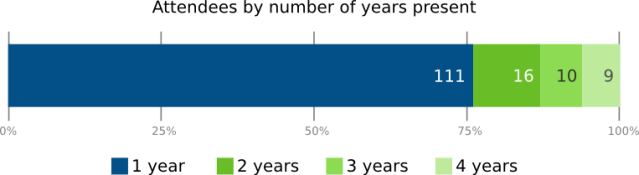

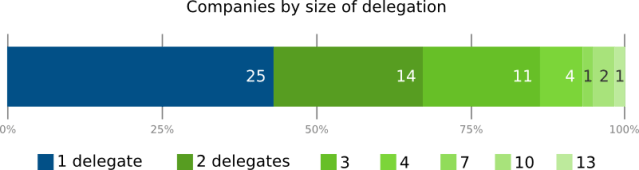

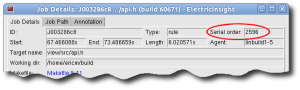

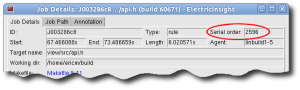

Now we know the file was created by job J003286c8. The easiest way to find the job’s serial order is to load the annotation in ElectricInsight, then bring up the Job Details window for the job (use Tools -> Find job by ID and enter the job to go directly to the Job Details):

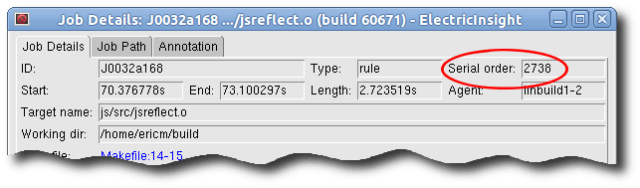

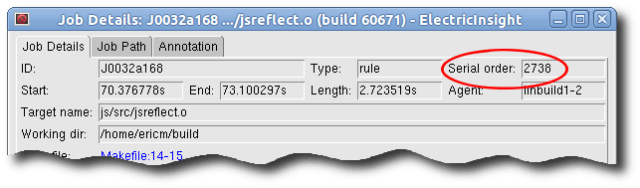

To find the job that reported the error, search for failed jobs in ElectricInsight. That leads us to job J0032a168:

The writer has an lower serial order than the reader, meaning the writer comes first. Therefore this theory is also BUSTED.

Hypothesis: the reader job ran before the writer job finished

Since the writer precedes the reader in serial order, perhaps the problem is that the jobs were executed in the wrong order. We can test this hypothesis by checking the start and end time of each job, again by looking at the Job Details in ElectricInsight. Here’s the writer:

And the reader:

The jobs were actually running at the same time so this hypothesis is CONFIRMED.

Now we know why the reader failed to find the file: it ran before the writer finished, so naturally the file was not available. But this raises an entirely new and more perplexing question: this is precisely the type of dependency problem that ElectricMake is supposed to prevent, so why didn’t it work this time?

Hypothesis: conflict detection is completely broken

If everything is working correctly, emake ought to have detected a conflict in the reader job, discarded the bogus result, and rerun the job after the writer finished. A bug in emake’s conflict detection system could explain why emake failed to detect the dependency between the jobs and rerun the reader. We could construct any number of elaborate tests to try to prove that conflict detection is broken, but before we disappear down that rabbit hole, we should check the file usage recorded in the reader job. If we find usage that should have caused a conflict with the writer job, then we can continue with this line of investigation. But if there is no such usage, then we can reject this theory.

For an overview of conflict detection, read “How ElectricMake guarantees reliable parallel builds”. Briefly, in order for conflict detection to work there must be usage in the reader that references api.h. That’s how emake knows that the reader tried to use the file. When emake checks for conflicts, it will see that usage, and realize that the job accessed the wrong version of the file based on the serial order of the job. At minimum we should see a lookup operation recorded on the file.

We can find the file usage for the reader on the Annotation tab of the Job Details for the job in ElectricInsight. You can use CTRL-F to search for occurances of the string api.h in the job annotation. But in this case, there’s only one, in the error message text. There’s no usage recorded for the file, lookup or otherwise:

So this theory is clearly BUSTED. There was no conflict because there was no usage on the file in the reader job. But again, this result raises a new question: why is there no usage referencing api.h?

Hypothesis: there was a problem accessing the parent directory

A problem accessing the parent directory would explain why there is no usage for api.h. After all, you can’t lookup a file in a directory if you can’t access the directory itself. To verify this theory we have to check for usage on the parent directory, of course. If there is none, then we can consider the theory confirmed, and we will have to come up with an explanation for that failure. But if there is usage on the parent directory, we can reject this theory. Specifically, we ought to see a lookup recorded for the parent directory, captured as a side effect of the compiler accessing files in the directory.

So we turn again to the Annotation tab of the Job Details for the reader job in ElectricInsight. This time we’ll search for the string build/view/src, which turns up just one match:

There is usage recorded for the parent directory, so this theory is BUSTED.

But there’s a surprise lurking in the result: instead of a lookup, we see a read recorded on the directory. Why is that surprising? Consider what a compiler does: read the source file, locate and read include files, and write the output file. Nothing in that description requires reading directories. This leads us to a new hypothesis, which explains both the peculiar usage and the build failure.

Hypothesis: the compiler caches directory listings to avoid system calls

The simplest way to search for include files is to stat() the file in each directory given in the include path. If the stat() succeeds, you’ve found the file; if not, try the next directory.

This is simple, but inefficient if you have many directories in your include path. Suppose you have 300 directories, and 10 include files. On average you’ll check half of the directories before finding each file, for a total of 1,500 stat() calls! As everybody “knows”, system calls are slow, so some clever compilers use a different strategy: cache the listing of each directory in a hash table, then consult this cache, rather than using stat(). With 300 directories, you can do a few hundred getdents() system calls, instead of thousands of stat() calls. Brilliant!

There’s just one problem: this trick conceals from emake the fact that the job tried to find api.h. Since the lookup never hit the filesystem, emake has no record of it, and therefore cannot detect the conflict.

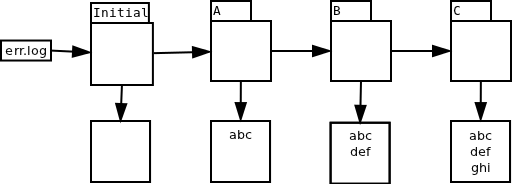

Sidebar: directory read conflicts

Of course, emake can still detect the conflict — by comparing the contents of the directory as they were with the contents as they should have been. That is, emake can tell that the reader job got a particular set of filenames for the directory listing, and that the set would have been different if the reader had run at the correct time — it would have included api.h.

This is an example of a directory read conflict. The important thing to know is that emake deliberately ignores these conflicts. If it didn’t, many builds would be horribly over-serialized — usually when programs read directories during a build, they don’t actually care about the entire directory listing. If emake strictly honored directory read conflicts, a job that read a directory would be serialized against every job that created or deleted any file in that directory. Nobody wants that.

Fortunately, there’s a solution: once the compiler has found the include file, it goes on to read it, of course. The read gets recorded in the job’s file usage, and that gives emake enough information to properly serialize the reader and the writer. So we need only to ensure that the filesystem state is correct when the reader runs, for a single run of the build. After that, emake will record the dependency in its history file, which will ensure correct behavior in subsequent builds. One easy way to do this is to run a single-agent build, using –emake-maxagents=1. That forces each job to run serially. This mode is how we will test our final hypothesis. If we’re correct, then the build will succeed; if not, the build will still fail.

Epilogue

As I stated, I didn’t have access to this build myself, so I had to wait for the user to test this hypothesis. When they did, they found that the single-agent build succeeded, and by checking the file usage for the reader job in the new build, we can see a read of api.h, as expected. Our final theory is CONFIRMED: the build failed because the compiler caches directory listings instead of doing direct filesystem lookups for include files, and emake intentionally ignores directory read conflicts.

The simplest solution to the problem is to generate a good history file by running emake with –emake-maxagents=1, but you could also add an explicit dependency between the jobs in the makefile; or you could wait for ElectricAccelerator 6.0, which will include a feature that allows you to explicitly enable directory read conflicts with a command-line option.